GlusterFS is a network-attached file system that lets you share drives across multiple devices on the network. It is a perfect fit for Vultr’s block storage offering, because it lets you share a drive across the network, which block storage does not support out of the box.

In terms of features, extensibility and reliability, GlusterFS is one of the most mature and stable distributed file systems available.

When changes are made to the drive on one server, they are automatically replicated to the other server in real time. To follow this guide, you will need:

- Two Vultr cloud instances, preferably running the same operating system.

- Two block storage drives of the same size.

After ordering the two block storage drives, attach one to VM 1 and the other to VM 2. Because both drives will hold identical copies of the same file system, the usable capacity is the total size of both drives divided by two. For example, with two 100 GB drives, 100 GB is usable (100 * 2 / 2).
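The capacity rule above can be written as a small shell function. This is a sketch that assumes equal-size drives, whole gigabytes, and a purely replicated volume (every brick holds a full copy); `usable_capacity_gb` is a hypothetical helper name.

```shell
# Usable capacity of a replicated GlusterFS volume, in GB.
# Each brick in a replica set holds a full copy of the data, so:
#   usable = drive_size * number_of_bricks / replica_count
usable_capacity_gb() {
  local drive_gb="$1" bricks="$2" replica="$3"
  echo $(( drive_gb * bricks / replica ))
}
```

For this guide's setup, `usable_capacity_gb 100 2 2` prints 100.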

Furthermore, both VMs need to be in the same location so that they are on the same private network; we will connect the servers using their internal IP addresses. Note that we will wipe all data on the block storage drives, so make sure they’re brand new and unformatted.

In this guide, we will use the servers storage1 and storage2, with private IP addresses 10.0.99.10 and 10.0.99.11, respectively. Your server names and IP addresses will most likely differ, so make sure to change them as you set up GlusterFS.

This guide was written with CentOS / RHEL 7 in mind. However, GlusterFS is available for most major Linux distributions, so the steps should carry over with small changes to the package and service commands.

Setting up GlusterFS

Step 1: Alter the /etc/hosts file

To connect to the respective instances by name, add easy-to-remember hostnames to the hosts file. On both servers, open the /etc/hosts file and add the following lines to the bottom of it:

10.0.99.10 storage1

10.0.99.11 storage2
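If you prefer to script this step, the sketch below appends an entry only when the hostname is not already present, so running it twice is harmless. `add_host_entry` and the `HOSTS_FILE` override are hypothetical names; the whole-word match assumes the hostname does not appear elsewhere in the file (for example in a comment).

```shell
# Append "IP hostname" to a hosts file unless the hostname is already listed.
# HOSTS_FILE defaults to /etc/hosts; point it at a scratch file to try it out.
add_host_entry() {
  local ip="$1" name="$2" file="${HOSTS_FILE:-/etc/hosts}"
  # -w matches the hostname as a whole word, so storage1 does not match storage10.
  if grep -qw -- "$name" "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Run it as root on both servers, e.g. `add_host_entry 10.0.99.10 storage1` followed by `add_host_entry 10.0.99.11 storage2`.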

Step 2: Add the disk on storage1

SSH into storage1 and run the following commands. By default, attached block storage drives show up as /dev/vdb. If the device name differs in your case, change it in the commands below.

Partition the disk:

fdisk /dev/vdb

Type “n” to create a new partition, then press “enter” to accept the defaults for the partition type, partition number, first sector and last sector, so the partition uses all available space on the block storage drive. Finally, type “w” to write these changes to the disk. After this has completed successfully, run:

/sbin/mkfs.ext4 /dev/vdb1

We’ve now created an ext4 file system on the new partition, because Vultr does not create any file systems on block storage by default.
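If you’d rather not answer fdisk’s prompts by hand, the keystrokes described above can be piped into it. This sketch assumes a blank disk and the prompt order of the fdisk shipped with CentOS 7 (new partition, type, number, first sector, last sector); `fdisk_answers` is a hypothetical helper. Walk through the interactive version once first if you are unsure, since a mismatched answer could write an unexpected partition table.

```shell
# Answers for an interactive fdisk session on a blank disk:
#   n -> new partition, p -> primary, 1 -> partition number 1,
#   two empty lines -> default first and last sector (use the whole disk),
#   w -> write the partition table and exit.
fdisk_answers() {
  printf 'n\np\n1\n\n\nw\n'
}
```

Usage: `fdisk_answers | fdisk /dev/vdb`, then `/sbin/mkfs.ext4 /dev/vdb1` as before.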

Next, create the directory the drive will be mounted on. You can change this name, but it rarely comes up in the rest of the setup, so to keep things simple I recommend leaving it as is.

mkdir /glusterfs1

To automatically mount the drive on boot, open /etc/fstab and add the following line at the bottom of the file:

/dev/vdb1 /glusterfs1 ext4 defaults 1 2
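Editing /etc/fstab by hand makes it easy to add the same line twice. The sketch below appends an entry only if no uncommented line already uses the same mount point (the second field). `add_fstab_entry` and the `FSTAB_FILE` override are hypothetical names.

```shell
# Append an fstab entry unless its mount point (2nd field) is already configured.
# FSTAB_FILE defaults to /etc/fstab; point it at a scratch file to try it out.
add_fstab_entry() {
  local entry="$1" file="${FSTAB_FILE:-/etc/fstab}"
  local mount_point
  mount_point=$(printf '%s\n' "$entry" | awk '{print $2}')
  # Skip if any non-comment line already mounts something at this path.
  if awk -v mp="$mount_point" '$1 !~ /^#/ && $2 == mp {found=1} END {exit !found}' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s\n' "$entry" >> "$file"
}
```

On storage1 this would be `add_fstab_entry '/dev/vdb1 /glusterfs1 ext4 defaults 1 2'`; the same helper works for the storage2 line and for the GlusterFS mount entries later in this guide.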

Finally, mount the drive:

mount -a

This mount persists across reboots, so the drive will be mounted again automatically whenever you reboot the server.

Step 3: Add the disk on storage2

Now that the disk is added and mounted on storage1, we need to prepare the disk on storage2 as well. The commands are the same apart from the mount point name. For fdisk, follow the same steps as above.

fdisk /dev/vdb

/sbin/mkfs.ext4 /dev/vdb1

mkdir /glusterfs2

Edit /etc/fstab and add the following line:

/dev/vdb1 /glusterfs2 ext4 defaults 1 2

Just like on storage1, the drive will automatically be mounted across reboots.

Mount the drive:

mount -a

Finally, let’s check whether the partition shows up:

df -h

You should see your drive listed here. If it isn’t, go back over the steps above.

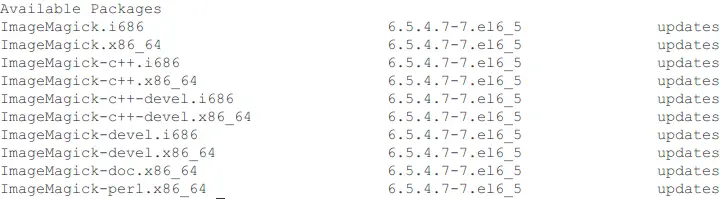
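To check the mount from a script instead of reading `df -h` output, you can look the mount point up in /proc/mounts. This is a Linux-only sketch; `is_mounted` is a hypothetical helper, and util-linux’s `mountpoint -q` is a ready-made alternative. Because /proc/mounts escapes spaces as `\040`, it assumes mount paths without spaces, which holds for every path in this guide.

```shell
# Succeed if the given directory is a mount point listed in /proc/mounts.
is_mounted() {
  local dir="${1%/}"   # strip a trailing slash so /glusterfs1/ also matches
  if [ -z "$dir" ]; then
    dir=/
  fi
  awk -v d="$dir" '$2 == d {found=1} END {exit !found}' /proc/mounts
}
```

For example, `is_mounted /glusterfs1 && echo mounted` on storage1, or the same with /glusterfs2 on storage2.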

Step 4: Installing GlusterFS on storage1 and storage2

We need to install GlusterFS next. Add the repository and install GlusterFS:

rpm -ivh http://dl.fedoraproject.org/pub/epel/7/x86_64/e/epel-release-7-5.noarch.rpm

wget -P /etc/yum.repos.d http://download.gluster.org/pub/gluster/glusterfs/3.7/3.7.5/CentOS/glusterfs-epel.repo

yum -y install glusterfs glusterfs-fuse glusterfs-server

Yum may report an error because the repository’s signature doesn’t match. In that case, you can tell yum to skip the GPG signature check, but be aware that this disables verification that the packages are authentic:

yum -y install glusterfs glusterfs-fuse glusterfs-server --nogpgcheck

On both servers, execute the following commands to start GlusterFS right now and start it automatically after a reboot:

systemctl enable glusterd.service

systemctl start glusterd.service

If you use an older version of CentOS without systemd, use the service and chkconfig commands instead:

chkconfig glusterd on

service glusterd start
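glusterd can take a moment to come up, so scripts that continue straight on to the next step may fail intermittently. A small retry helper is one way to wait for it. `retry` is a hypothetical function written for this sketch, not a system command.

```shell
# Run a command up to N times, sleeping D seconds between attempts.
# Returns 0 as soon as the command succeeds, or 1 if every attempt fails.
retry() {
  local attempts="$1" delay="$2"
  shift 2
  local i
  for (( i = 1; i <= attempts; i++ )); do
    if "$@"; then
      return 0
    fi
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  return 1
}
```

On a systemd-based server, `retry 10 3 systemctl is-active --quiet glusterd` waits up to roughly 30 seconds for glusterd to report as active.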

Step 5: Disabling the firewall on storage1 and storage2

Although it’s not the best solution per se, turning off the firewall eliminates possible conflicts with blocked ports. If you don’t feel comfortable doing this, feel free to adjust the rules to your liking, but because GlusterFS uses several ports (the management daemon plus one port per brick), I recommend disabling the firewall. Since a private network on Vultr is actually private (you don’t need to firewall out other customers), you could also block all incoming traffic from the internet and allow only connections from the private network. However, simply turning off the firewall without altering any other system configuration is sufficient:

systemctl stop firewalld.service

systemctl disable firewalld.service

In case you use an older CentOS version which does not support systemctl, use the service and chkconfig commands:

service firewalld stop

chkconfig firewalld off

In case you don’t use firewalld, try disabling iptables:

service iptables stop

chkconfig iptables off
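If you would rather keep firewalld running and only allow the private network, one option is to add the private subnet as a source of firewalld’s built-in “trusted” zone, which accepts all traffic from that source. This sketch assumes the private subnet is 10.0.99.0/24, inferred from this guide’s example addresses, so check your own range. `trust_private_subnet` and the `FIREWALL_CMD` override (handy for a dry run with `FIREWALL_CMD=echo`) are hypothetical.

```shell
# Trust all traffic from the private subnet while firewalld keeps filtering
# the public interface. FIREWALL_CMD=echo prints the commands instead.
trust_private_subnet() {
  local subnet="$1" fw="${FIREWALL_CMD:-firewall-cmd}"
  # Add the subnet as a permanent source of the "trusted" zone.
  "$fw" --permanent --zone=trusted --add-source="$subnet" || return 1
  # Reload so the permanent rule takes effect now.
  "$fw" --reload
}
```

Run `trust_private_subnet 10.0.99.0/24` as root on both servers instead of stopping firewalld.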

Step 6: Add servers to the storage pool

After turning off the firewall, we can add both servers to the trusted storage pool: the group of servers whose storage can be combined into volumes. Run the following command on storage1:

gluster peer probe storage2

This command adds storage2 to storage1’s trusted storage pool. Next, run the following command on storage2, so that storage2 also knows storage1 by its hostname rather than only by its IP address:

gluster peer probe storage1

After executing this on both servers, we should check the status on both servers:

gluster peer status

Both servers should report “Number of Peers: 1”. A common mistake is expecting to see 2 peers, but a server does not list itself: storage1 peers with storage2 and vice versa. Therefore, 1 peer is what we need.
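A scripted version of this check could read the count from the status output. This sketch assumes `gluster peer status` prints a line of the form `Number of Peers: 1`; `peer_count` is a hypothetical helper.

```shell
# Print the peer count from `gluster peer status` output read on stdin.
# Splits on ": " so "Number of Peers: 1" yields "1".
peer_count() {
  awk -F': *' '/^Number of Peers:/ {print $2; exit}'
}
```

Usage: `[ "$(gluster peer status | peer_count)" = 1 ] && echo "peering OK"` on each server.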

Step 7: Creating a shared drive on storage1

Now that both servers are able to connect with each other through GlusterFS, we’re going to create a shared drive.

On storage1, execute:

gluster volume create mailrep-volume replica 2 storage1:/glusterfs1/files storage2:/glusterfs2/files force

The volume has now been created. In GlusterFS, you need to “start” a volume so it’s actively shared across multiple devices. Let’s start it:

gluster volume start mailrep-volume
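To confirm the volume is replicated and started before mounting it, you can inspect `gluster volume info mailrep-volume`. This sketch assumes its usual `Key: Value` layout with `Type: Replicate` and `Status: Started` lines; `is_started_replica` is a hypothetical name.

```shell
# Succeed only if `gluster volume info` output (on stdin) describes a
# replicated volume whose status is Started.
is_started_replica() {
  awk -F': *' '
    $1 == "Type"   && $2 == "Replicate" { replicate = 1 }
    $1 == "Status" && $2 == "Started"   { started = 1 }
    END { exit !(replicate && started) }
  '
}
```

Usage: `gluster volume info mailrep-volume | is_started_replica && echo "volume is up"`.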

Next, pick a folder that should be on the volume and replicated across both servers. In this tutorial, we’ll use /var/files, but it can be anything you like. For now, create it on storage1:

mkdir /var/files

Next, mount it:

mount.glusterfs storage1:/mailrep-volume /var/files/

Update /etc/fstab so the drive will automatically be mounted on boot. Add the following:

storage1:/mailrep-volume /var/files glusterfs defaults,_netdev 0 0

Remount the drive:

mount -a

Step 8: Creating a shared drive on storage2

Now that the volume is mounted on storage1, we need to mount it on storage2 as well. Create a folder with the same path and name, then mount the volume:

mkdir /var/files

mount.glusterfs storage2:/mailrep-volume /var/files/

Just like on storage1, add the following line to /etc/fstab:

storage2:/mailrep-volume /var/files glusterfs defaults,_netdev 0 0

Remount the drive:

mount -a

Step 9: Test the shared storage

Navigate to the /var/files folder on storage1 and create a file:

cd /var/files

touch created_on_storage1

Next, head over to storage2. Run ls -la /var/files and you should see the file created_on_storage1.

On storage2, navigate to the /var/files folder and create a file:

cd /var/files

touch created_on_storage2

Go back to storage1 and execute ls -la /var/files. You should see the file created_on_storage2 appear here.
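Replication is usually near-instant, but if you want to script this test, polling for the file avoids a race with a bare `ls`. `wait_for_file` is a hypothetical helper; the timeout is in seconds.

```shell
# Poll once per second until a file exists or the timeout (seconds) expires.
wait_for_file() {
  local path="$1" timeout="${2:-30}" waited=0
  while [ ! -e "$path" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}
```

On storage2: `wait_for_file /var/files/created_on_storage1 30 && echo replicated`.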

Step 10: Reboot all servers (Optional)

To double-check that your setup persists across reboots, it’s best practice to reboot all servers. Wait for one server to be back up before rebooting the other, so the shared drive is mounted automatically (see the note on remounting below).

Reboot storage1 first, wait for it to come back up, then reboot storage2. Then log in to both servers and run:

cd /var/files

ls -la

You should now see both files. Before putting the volume into use, remove the test files so you start with an empty volume. You can do this on storage1, storage2 or both; changes are replicated instantly:

cd /var/files

rm created_on_storage1

rm created_on_storage2

You should now have an identical shared volume on both servers, regardless of which server you make changes on.

You have now set up a full-fledged GlusterFS volume with 100 GB (or more) of usable space. If you need more in the future, the setup scales easily: you can add more capacity and/or more servers as your workload requires.

Thank you for reading!

Important note regarding remounting

GlusterFS keeps your data up to date on two drives. However, when both servers are rebooted at the same time, you will need to force-start the volume manually by running the following command:

gluster volume start mailrep-volume force

That is because one of the servers acts as a server and the other as a client. Although the difference isn’t easily noticeable in practice, it means that when you need to reboot both servers, you should reboot one, wait until it’s up, and then reboot the other.

Important note regarding backups

Even though your data is replicated across two drives, you should keep at least three copies of it. Replication protects you against hardware failure and the like, but because changes are applied instantly, it offers no protection against human error: if you remove all files on one drive, the deletion is immediately replicated to the other drive, wiping your data on both instances.

Luckily, there are multiple ways to guard against this. First, I recommend enabling backups on the cloud instances themselves. These backups do not include the data on the block storage, but they do protect the data on the instance itself.

To back up the data on the block storage itself, I recommend spinning up a separate instance (for example, a SATA plan) and running a backup to it from one of the two servers, for example every night. This way, your data is also stored safely on a separate device.
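A nightly backup along these lines could look roughly like the sketch below. It assumes you archive the mounted volume at /var/files with tar and then copy the dated archive to the separate backup instance. `backup_volume`, the output directory and the `backup1` host name are hypothetical.

```shell
# Create a dated, compressed archive of a directory and print its path.
# Usage: backup_volume <source_dir> <output_dir>
backup_volume() {
  local src="${1:?source directory required}"
  local outdir="${2:?output directory required}"
  local archive="$outdir/files-$(date +%Y-%m-%d).tar.gz"
  mkdir -p "$outdir"
  # -C archives the contents of src with relative paths.
  tar -C "$src" -czf "$archive" . && printf '%s\n' "$archive"
}
```

For example, `archive=$(backup_volume /var/files /root/backups) && scp "$archive" backup1:/backups/`, saved as a script and run nightly from cron on one of the two servers. Keeping dated archives rather than mirroring the volume means an accidental deletion does not immediately wipe the backup as well.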

FAQ

Am I able to increase my disk storage?

You can increase the block storage size from the Vultr control panel. Afterwards, you need to resize the partition and file system inside the operating system, but that is out of the scope of this article.

Can I attach block storage to more than two servers?

Although this guide was written for two servers (and thus two block storage drives, one attached to each server), you can adapt it for more than two servers as well. A setup with four servers and drives could look like this, for example:

- VM: storage1
- VM: storage2
- VM: storage3
- VM: storage4
- Block Storage: attached to storage1
- Block Storage: attached to storage2
- Block Storage: attached to storage3
- Block Storage: attached to storage4

If all block storage drives have a capacity of, say, 200 GB, you would get 200 * 4 / 4 = 200 GB of usable space. In other words, when every server holds a replica, the usable space is always the capacity of a single block storage drive. That is because each brick stores a full copy of the data. GlusterFS does not depend on a single master server, so the volume can keep serving data when a server goes down, which is what makes this such a redundant, reliable and stable solution.
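With more servers, the brick arguments for `gluster volume create` get long. Following this guide’s naming (server N is storageN with its brick at /glusterfsN/files), a helper can generate them. `brick_list` is a hypothetical function, and the sketch assumes storage3 and storage4 have already been added to /etc/hosts and probed from storage1.

```shell
# Build space-separated brick arguments for `gluster volume create`:
# server N is "storageN" and its brick lives at /glusterfsN/files.
brick_list() {
  local count="$1" i bricks=()
  for (( i = 1; i <= count; i++ )); do
    bricks+=("storage${i}:/glusterfs${i}/files")
  done
  printf '%s\n' "${bricks[*]}"
}
```

On storage1: `gluster volume create mailrep-volume replica 4 $(brick_list 4) force`. The `$(brick_list 4)` is left unquoted on purpose so each brick becomes a separate argument.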
